OLAP cubes use a dimensional model based on Facts and Dimensions. Cubes provide pre-processed aggregations based on available data values. If your cube is small (measured in megabytes) and not heavily used, you may want to simply process it several times a day. An easy way to do this is to create an SSIS package that uses the ‘Analysis Services Processing Task’.

Proactive caching allows data to stream continually into the cube. As data comes in, SSAS notices the new data and automatically rolls it into the cube. If you have a lot of small cubes, proactive caching is great because you can keep these cubes up-to-date without scheduling a lot of SSIS packages to refresh them all. A cube is only as current as its underlying data sources and the ETL that feeds them. If you have a very efficient ETL, or no ETL at all, proactive caching may be a good choice for you since data will flow right into the cube.

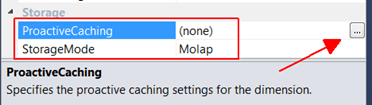

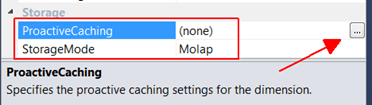

Ok, let’s take a look at how to enable proactive caching on your cubes. If we simply turned on proactive caching for the fact table(s), we could run into trouble when new fact rows link to a dimension value that the dimension has not picked up yet. To avoid this, first turn on proactive caching on each dimension that could have new data coming in or existing values updated. Open each dimension in the cube and go to the Properties tab. Near the bottom, expand the Storage section. The default options are MOLAP with no proactive caching. Click the details button to pull up the Dimension Storage Settings window.

Click on Options.

Check the box at the top to ‘Enable proactive caching’. Feel free to look into the various settings and adjust them however you like, but I’m going to keep the defaults. The silence interval is how long SSAS waits after the last data change it saw before processing the dimension. In this case, it waits 10 seconds after the last data change and then processes the dimension. This is helpful when a burst of updates hits the data source, because SSAS won’t try to process the dimension on every single update. The silence override interval covers the case where data keeps changing without a pause: if changes have been arriving continually for that long (in this case 10 minutes), SSAS goes ahead and processes the dimension anyway, then resumes the silence interval checks.
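To make the two intervals a bit more concrete, here is a small Python sketch of the timing rules as described above. It is a toy model for building intuition, not how SSAS is implemented internally; the function name `processing_times` and its parameters are made up for this illustration, and times are plain seconds.

```python
from typing import List, Sequence


def processing_times(change_times: Sequence[float],
                     silence_interval: float = 10.0,
                     silence_override: float = 600.0) -> List[float]:
    """Return the times at which processing would start in this toy model.

    A run starts when either no change has been seen for `silence_interval`
    seconds, or changes have been pending for `silence_override` seconds,
    whichever comes first.
    """
    runs: List[float] = []
    burst_start = None  # time of the first change not yet processed
    last_change = None  # time of the most recent change seen
    for t in sorted(change_times):
        if burst_start is not None:
            quiet_deadline = last_change + silence_interval
            override_deadline = burst_start + silence_override
            fire = min(quiet_deadline, override_deadline)
            if fire <= t:
                # A run started before this change arrived, so this
                # change begins a new burst.
                runs.append(fire)
                burst_start = None
        if burst_start is None:
            burst_start = t
        last_change = t
    if burst_start is not None:
        # No more changes: the pending burst is processed at its deadline.
        runs.append(min(last_change + silence_interval,
                        burst_start + silence_override))
    return runs


# Two changes close together, then one much later: two runs.
print(processing_times([0, 3, 30]))           # [13.0, 40.0]

# A change every 7 seconds never leaves a 10 second gap, so the
# 10 minute override forces a run at 600 seconds.
print(processing_times(range(0, 700, 7)))     # [600.0, 703.0]
```

The second call is the case the silence override interval exists for: without it, a source that never goes quiet for 10 seconds would never trigger processing at all.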

Next, click on the Notifications tab. On this tab we need to specify the source table or view for this dimension.

On this screen, check the box that says ‘Specify tracking tables’ and click the button on the right. Select the source table or view for this dimension. This tells SSAS to update the cube dimension whenever data changes in this source.

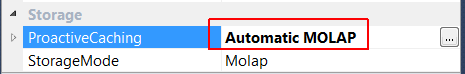

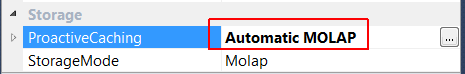

Note: As the message at the bottom indicates, in order for SSAS to notice data changes, the SSAS service has to run under an account with either System Administrator or ALTER TRACE privileges. For demo purposes, I set my SSAS service account to run as LocalSystem. Click ‘OK’ to exit this screen, and ‘OK’ on the Storage Options screen. Notice that the storage option on the properties tab now says ‘Automatic MOLAP’.

Repeat this step for each of your dimensions that could have data changes. Then do the same on your cube, but be sure to select your Fact table data source (table or view). Now deploy and process your cube on SSAS and test it out. Connect to your cube from Excel and pull up some data. Try changing a value in your existing fact table in Management Studio. Wait 10 seconds, or whatever silence interval you specified, and refresh your Excel data. You should now see the updated data, and we didn’t have to re-process anything! Next, try adding a new dimension record and a new fact record that uses the new dimension record. Again, after waiting 10 seconds and refreshing the data in Excel, you should have a new dimension record as well as a new fact record.
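That last test is also why the dimensions got proactive caching before the cube did. Here is a tiny Python sketch of the idea, assuming the trouble in question is a fact row whose key has no matching member in the processed dimension. The data and the helper name `unmatched_fact_keys` are hypothetical and only stand in for what SSAS checks during processing.

```python
def unmatched_fact_keys(fact_rows, known_members):
    """Return the dimension keys used by fact rows that have no known member."""
    known = set(known_members)
    return sorted({key for key, _amount in fact_rows if key not in known})


# Hypothetical data: member 3 was just added to the source dimension table,
# along with a fact row that uses it.
processed_dimension = {1, 2}      # what the cube dimension knew before the change
source_dimension = {1, 2, 3}      # what the source table holds now
source_facts = [(1, 9.99), (3, 4.50)]

# Facts refreshed while the dimension is stale: key 3 has nowhere to go.
print(unmatched_fact_keys(source_facts, processed_dimension))  # [3]

# Dimension refreshed first: every fact row finds its member.
print(unmatched_fact_keys(source_facts, source_dimension))     # []
```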

Proactive caching lets you continuously add new and updated data into your cube without having to schedule processing. Where it really comes in handy is when you have a lot of small cubes, or when your cube is built on top of an OLTP system. With a lot of small cubes, proactive caching means you don’t have to schedule a lot of cube refreshes with SSIS packages. Cubes depend on the underlying data and its ETL processes. If your cube sits directly on top of OLTP data, you avoid the ETL bottleneck, and proactive caching can feed data automatically into your cube, keeping it up-to-date.